These are difficult times for healthcare’s C-level administrators. A number of major challenges are looming on the horizon like dark clouds threatening to merge into a perfect storm. First and foremost, I suppose, is figuring out how to support and encourage Meaningful Use under the final Stage 1 guidelines released on July 13. The text still makes no specific mention of “images”, but the same two objectives that reference the exchange of “key clinical information” are now codified in the 14 core objectives that hospitals are required to comply with at least six months before the November 30, 2011 deadline. That is the last day for eligible hospitals to register and attest to receive an incentive payment for FY 2011.

The incentives shrink with every year of delay, so delay will be expensive: it effectively costs the organization precious development money.

While one may argue about whether medical images should or could be included in the term “key clinical information”, there is no argument that exchanging images with outside organizations and providers by copying data to CDs is problematic. It’s also expensive (labor and shipping costs). No wonder, then, that there are now twelve vendors offering either Electronic Image Share appliances or Cloud-based Image services. Should the C-level administrators look into solving this problem at the risk of taking their eyes off the Meaningful Use issue? If the two issues are mutually exclusive, probably not.

Perhaps the darkest cloud on the horizon, because it is associated with hundreds of thousands of dollars in service fees, is the set of upcoming PACS data migrations. This cloud might appear to many as faint and ill-defined, but make no mistake…it is there, it is coming, and it is going to be bad. Once again, should the C-level administrators spend time worrying about future data migrations when there is only a year left to get the Electronic Health Record system up and running and meeting those Stage 1 objectives? If these two issues are mutually exclusive, probably not.

Here’s another important date with strong negative implications…2015, the year when Medicare payment adjustments begin for eligible professionals and eligible hospitals that are NOT meaningful users of Electronic Health Record (EHR) technology. “Adjustments” is political nice-nice for lowered reimbursements. Medical images will most certainly be an explicitly stated part of the Meaningful Use criteria by that time.

One way to look at the big picture is that hospitals that can demonstrate Meaningful Use of key clinical information are eligible for a maximum of four years of financial incentives, paid for each year they qualify. Deploying an IT and visualization infrastructure over a five-year period that will ultimately deliver all of a patient’s longitudinal medical record data to physicians and caregivers is going to be expensive. It makes perfect sense to develop a Strategic Plan that goes after every bit of available incentive funding. That plan can, and should, weave all of the looming challenges into a single, cohesive, step-by-step plan. The challenges described above are not mutually exclusive.

If one takes the position that electronically sharing medical images outside the organization supports the Stage 1 objectives, Step 1 of the Strategic Plan would be to deploy an electronic Image Share solution. Whether that solution is an on-site, capitalized appliance or a Cloud-based service is another discussion, as the pros and cons are very organization-specific. Just make sure that the solution has upgrade potential and is not a dead-end product.

By mid-2011 it’s time to start deploying Step 2 of the Strategic Plan…image-enabling the EHR. This might seem like an early jump on the image access issue, but we don’t know whether images will be specifically mentioned in the core objectives for Stage 2 or Stage 3, so why risk having to scramble to catch up? Perhaps the easiest way to image-enable the EHR would be to deploy a standalone universal viewer (display application). There are already a number of good universal viewers that require minimal server resources, feature server-side rendering, and need zero or near-zero client software. The IT department develops a simple URL interface between the EHR Portal and the universal viewer, and then individual interfaces between the universal viewer and each of the image repositories in the enterprise (i.e., the PACS). Ah, but there’s the rub. All those PACS interfaces are going to be expensive to develop, to maintain, and to replace with each new PACS, and there is no assurance that the universal viewer will be able to interpret all the variations in those disparate PACS headers.
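To give a feel for how simple the EHR-to-viewer side of that arrangement can be, here is a minimal Python sketch of building a launch URL from the EHR Portal. The endpoint `https://viewer.example.org/launch` and the `patientId`/`accession` parameter names are hypothetical; every universal viewer defines its own launch syntax, and a production interface would also need authentication, which this sketch leaves out.

```python
from typing import Optional
from urllib.parse import urlencode

# Hypothetical launch endpoint; each real universal viewer defines its own URL syntax.
VIEWER_BASE_URL = "https://viewer.example.org/launch"


def build_viewer_url(patient_id: str, accession_number: Optional[str] = None) -> str:
    """Build a context-launch URL from the EHR Portal into the universal viewer.

    The parameter names "patientId" and "accession" are illustrative assumptions,
    not any vendor's actual API. Authentication/session tokens are omitted.
    """
    params = {"patientId": patient_id}
    if accession_number:
        # Narrow the launch to one study when the EHR knows which one was clicked.
        params["accession"] = accession_number
    # urlencode escapes the values, e.g. a space becomes "+".
    return f"{VIEWER_BASE_URL}?{urlencode(params)}"


if __name__ == "__main__":
    # A patient-level launch and a study-level launch.
    print(build_viewer_url("12345"))
    print(build_viewer_url("MRN 12345", "ACC-0001"))
```

Note that this half is the easy part: the expensive pieces are the viewer-to-PACS interfaces behind it, one per repository.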

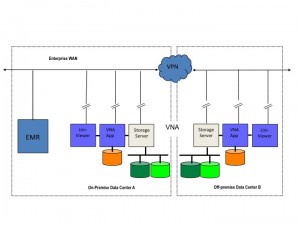

Those of you who have been following my posts on this website can see where this is going. The best solution, and certainly the best long-term solution, is to deploy a PACS-Neutral Archive (PNA) and an associated Universal Viewer (aka UniViewer). The EHR is not designed to manage image data; it relies instead on interfaces between its Physician Portal and the various established image data repositories in the enterprise. The PNA solves most of the organization’s data management problems by consolidating all of the image data into a single “neutral” enterprise repository, which directly supports and encourages Meaningful Use of all the data objects that will make up the patient’s longitudinal medical record. The problem is that most organizations will not be prepared to deploy a PACS-Neutral Archive in 2011, so this would be a bit much to schedule for Step 2.

My Step 2 would be to expand the Image Share solution from Step 1 with more storage…enough to accommodate the image data that the organization will start migrating from each of its department PACS. Of course, this means making sure that the Image Share solution chosen in Step 1 is capable of becoming a PACS-Neutral Archive; at a minimum, it would have to support bi-directional tag morphing. By the time the organization has migrated the most recent 12 to 18 months of PACS image data, it will be able to support Meaningful Use of the most relevant image data both inside and outside the organization. It is important to appreciate that the PNA features and functions required to meet the objectives of Step 2 (while the data is being migrated) are only a fraction of the full PNA feature set, so the software licenses required for Step 2 should cost a fraction of the licenses for a complete PNA. Fortunately, a few PNA vendors appreciate this subtlety.
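To make “bi-directional tag morphing” a little more concrete: the archive rewrites certain header values on the way in, so that studies from different department PACS can coexist without collisions, and reverses the rewrite on the way out, so that each PACS sees data in the form it expects. The sketch below uses plain Python dictionaries keyed by DICOM attribute keywords in place of real DICOM headers. The `MorphRule` fields, the issuer and prefix values, and the assumption that the source PACS does not already populate Issuer of Patient ID are all illustrative, not any vendor’s actual rule syntax.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MorphRule:
    """Hypothetical per-PACS morphing rule; a real PNA would load these from its configuration."""
    issuer: str            # Issuer of Patient ID assigned to this source PACS
    accession_prefix: str  # prefix that keeps accession numbers unique across PACS


def morph_inbound(header: dict, rule: MorphRule) -> dict:
    """PACS -> archive: record the patient ID's issuer and make the accession number unique.

    Assumes the source PACS does not populate IssuerOfPatientID itself.
    (Real DICOM caps Accession Number at 16 characters; this sketch ignores that.)
    """
    out = dict(header)  # copy so the caller's header is never mutated
    out["IssuerOfPatientID"] = rule.issuer
    out["AccessionNumber"] = rule.accession_prefix + header["AccessionNumber"]
    return out


def morph_outbound(header: dict, rule: MorphRule) -> dict:
    """Archive -> PACS: undo morph_inbound so the PACS sees its own native values."""
    out = dict(header)
    if out.get("IssuerOfPatientID") == rule.issuer:
        del out["IssuerOfPatientID"]
    accession = header["AccessionNumber"]
    if accession.startswith(rule.accession_prefix):
        out["AccessionNumber"] = accession[len(rule.accession_prefix):]
    return out


if __name__ == "__main__":
    rule = MorphRule(issuer="HOSP_A_RAD", accession_prefix="A")
    native = {"PatientID": "12345", "AccessionNumber": "998877"}
    neutral = morph_inbound(native, rule)
    print(neutral)
    # The round trip restores exactly what the department PACS sent.
    print(morph_outbound(neutral, rule) == native)
```

The point of keeping the two directions symmetric is that a department PACS can keep querying and retrieving its migrated studies during Step 2 without ever seeing the archive’s neutral identifiers.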

Step 3 could come sometime after 2012, when the organization has sufficient funds approved to turn on all of the features and functions of a PNA and to purchase enough storage to accommodate all of the enterprise’s image data.

In this Strategic Plan, all of the major image-related challenges looming on the horizon are addressed and solved in three creative yet logical Steps. Using the infrastructure to support and encourage Meaningful Use in turn qualifies the organization for significant financial incentives that should go a long way toward financing the Plan.